The Issue of Codex CLI Only Supporting WSL2 on Windows

On Windows, Codex CLI currently runs only under WSL2. I looked up how to install WSL2 with Ubuntu 24.04 and set it up, then installed Codex CLI by following the instructions on the DeepWiki page below.

https://deepwiki.com/openai/codex

Below is an example MCP configuration for Codex CLI, taken from DeepWiki.

~/.codex/config.toml:

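A minimal sketch of such an entry, assuming the `[mcp_servers.<name>]` table layout in Codex CLI's config.toml. The server name `example-server`, the package name `example-mcp-server`, and the `API_KEY` variable are hypothetical placeholders, not values from DeepWiki:

```toml
# ~/.codex/config.toml
# Hypothetical stdio MCP server entry; the name, package, and key are placeholders.
[mcp_servers.example-server]
command = "npx"                       # program Codex CLI launches inside WSL2
args = ["-y", "example-mcp-server"]   # arguments passed to that program
env = { "API_KEY" = "your-key" }      # optional environment variables for the server
```

An entry like this describes a stdio server: Codex CLI starts the command as a child process inside WSL2 and exchanges MCP messages with it over standard input and output.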

With this configuration alone, however, local MCPs do not work.

WSL2 Mirrored Mode Networking

Windows 11 version 22H2 and later offer a WSL2 networking feature called mirrored mode.

https://learn.microsoft.com/en-us/windows/wsl/networking#mirrored-mode-networking

With mirrored mode enabled, a URL such as http://127.0.0.1:8000 is reachable from both the Windows host and the WSL2 Ubuntu guest.

Set it up as follows:

C:\Users\<user>\.wslconfig:

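A minimal sketch of that file, assuming no other WSL settings are needed. The `networkingMode` key under the `[wsl2]` section is the setting described in the Microsoft documentation linked above:

```ini
# C:\Users\<user>\.wslconfig
[wsl2]
# Share the Windows host's network interfaces with the WSL2 guest
networkingMode=mirrored
```

The change takes effect only after WSL restarts, for example by running `wsl --shutdown` from Windows and then opening the Ubuntu distribution again.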

This made local, network-based MCPs usable. The following is an example MCP configuration for Blender in Codex CLI.

~/.codex/config.toml:

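A sketch of what such an entry might look like, assuming the blender-mcp package is launched through `uvx` inside WSL2 while Blender and its MCP add-on run on the Windows host. The package name, the launcher, and the server name `blender` are assumptions, not the original configuration:

```toml
# ~/.codex/config.toml
# Assumed setup: the MCP server process runs in WSL2 and connects
# to the Blender add-on on the Windows host over localhost.
[mcp_servers.blender]
command = "uvx"
args = ["blender-mcp"]
```

In this arrangement the server process itself runs in the Ubuntu guest, and it can only reach Blender on the Windows side because mirrored mode makes 127.0.0.1 refer to the same endpoints on both.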

Stdio MCP on WSL2

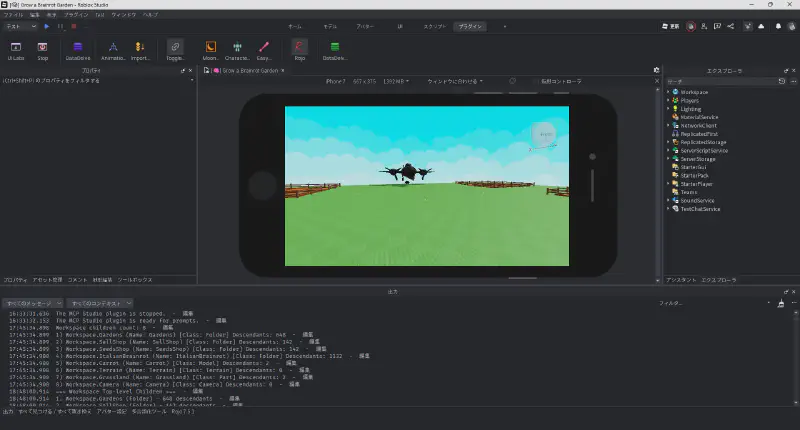

I used an MCP called studio-rust-mcp-server to control Roblox Studio.

I first specified the Windows-built executable, but it did not work correctly.

After trying various things, I built it on Ubuntu as a last resort, and that worked. The usage instructions are in the README.md of the repository below.

https://github.com/takoyakisoft/studio-rust-mcp-server-wsl2

~/.codex/config.toml:

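A sketch of a stdio entry pointing at the Ubuntu build, assuming the binary is named `rbx-studio-mcp`, accepts a `--stdio` flag, and sits in Cargo's default release output directory. The path and the server name `roblox-studio` are hypothetical, so check the README.md linked above for the actual values:

```toml
# ~/.codex/config.toml
# Hypothetical path to the binary built on Ubuntu; adjust to your checkout.
[mcp_servers.roblox-studio]
command = "/home/<user>/studio-rust-mcp-server-wsl2/target/release/rbx-studio-mcp"
args = ["--stdio"]   # assumed flag that selects stdio transport
```

Because Codex CLI launches the command inside WSL2, a Linux build is the natural fit for a stdio entry like this one.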

I gave up on supergateway, which converts MCP stdio to SSE, because it produced an error when connecting to Roblox Studio.

Bonus: Setting gpt-5-mini as the Default

gpt-5 is the default model, but Codex CLI also works well with gpt-5-mini.

~/.codex/config.toml:

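A minimal sketch, assuming the top-level `model` key in Codex CLI's config.toml selects the default model:

```toml
# ~/.codex/config.toml
# Keep this above any [mcp_servers.*] tables so it stays a top-level key.
model = "gpt-5-mini"
```

In TOML, a key written after a table header belongs to that table, so placing `model` below an `[mcp_servers.<name>]` section would attach it to that server entry instead of setting the default.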